by jodi

Douglas Knox touches on the future of “distant reading”:((What’s “distant reading”? Think “text mining of literature,” but it’s deeper than that. It’s also called the macroeconomics of literature (“macroanalysis”) and Hathi Trust taking?))

For rights management reasons and also for material engineering reasons, the research architecture will move the computation to the data. That is, the vision of the future here is not one in which major data providers give access to data in big downloadable chunks for reuse and querying in other contexts, but one in which researchers’ queries are somehow formalized in code that the data provider’s servers will run on the researcher’s behalf, presumably also producing economically sized result sets.
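To make the “move the computation to the data” idea concrete, here is a toy Python sketch. It is an illustration under stated assumptions, not any real provider’s API: the `DataProvider` class, its `run` method, and the two-book corpus are all hypothetical. The researcher’s query travels to the provider as code; the full texts stay put, and only a small, capped result set comes back.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple

# A researcher's query maps one text to a small summary (here, word counts).
Query = Callable[[str], Counter]


class DataProvider:
    """Hypothetical provider holding rights-restricted full texts.

    The texts themselves are never returned; only aggregated results leave.
    """

    def __init__(self, corpus: Dict[str, str]) -> None:
        self._corpus = corpus  # private: title -> full text

    def run(self, query: Query, top_n: int = 5) -> List[Tuple[str, int]]:
        """Run the researcher's query on every text, return a compact result."""
        total: Counter = Counter()
        for text in self._corpus.values():
            total.update(query(text))  # add this text's counts to the total
        # Only the top_n most common items are sent back to the researcher.
        return total.most_common(top_n)


def word_counts(text: str) -> Counter:
    """Researcher-supplied query: lowercase word frequencies."""
    return Counter(text.lower().split())


provider = DataProvider({
    "Book A": "the whale and the sea",
    "Book B": "the sea the sky",
})
print(provider.run(word_counts, top_n=2))  # prints [('the', 4), ('sea', 2)]
```

The `top_n` cap stands in for the “economically sized result sets” Knox anticipates: the size of the reply depends on the query, not on the size of the corpus.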

There are also some implicit research goals for those in cyberinfrastructure, in digital humanities support, and in text mining who aim to support humanities scholars:

Whatever we mean by “computation,” that is, can’t be locked up in an interface that tightly binds computation and data. Readers already need (and for the most part do not have) our own agents and our own data, our own algorithms for testing, validating, calibrating, and recording our interaction with the black boxes of external infrastructure.

This kind of black-box infrastructure contrasts with “using technology critically and experimentally, fiddling with knobs to see what happens, and adjusting based on what they find,” which becomes possible when a scholar is “free to write short scripts and see results in quick cycles of exploration.”

I’m pulling these out of context — from Douglas’ post on the Digital Humanities 2011 conference.

Tags: dh11, digital humanities, distant reading, Google Books, macroanalysis

Posted in books and reading, information ecosystem

by jodi

I’ve been slow in blogging about the Web Science Summer School being held in Galway this week. Check Clare Hooper’s blog for more reactions (starting from her day one post from two days ago).

Wednesday was full of deep and useful talks, but I wanted to start at the beginning, so I waited for a copy of Stefan Decker’s slides.

Hidden in the orientation to DERI, there are a few slides (12-19) which will be new to DERIans. They’re based on an argument Stefan made to the database community recently: any data format enabling the data Web is “more or less” isomorphic to RDF.

The argument goes:

The three enablers for the (document) Web were:

- scalability

- no censorship

- a positive feedback loop (exploiting Metcalfe’s Law) ((The value of a communication network is proportional to the number of possible connections between its nodes, which grows as n^2 for n nodes.)).
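The n^2 in Metcalfe’s Law comes from counting the possible pairwise connections among n nodes. Here C(n) (number of connections) and V(n) (value of the network) are notation introduced for this note:

```latex
\[
C(n) = \binom{n}{2} = \frac{n(n-1)}{2} \sim \frac{n^2}{2},
\qquad V(n) \propto C(n), \text{ so } V(n) \text{ grows as } n^2.
\]
```

For example, 10 nodes allow 45 connections and 20 nodes allow 190: doubling the network roughly quadruples its potential connections.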

Take these as requirements for the data Web. Enabling Metcalfe’s Law, according to Stefan, requires:

- Global Object Identity.

- Composability: The value of data can be increased if it can be combined with other data.

The bulk of his argument focuses on this composability feature. What sort of data format allows composability?

It should:

- Have no schema.

- Be self-describing.

- Be “object centric”. In order to integrate information about different entities, data must be related to these entities.

- Be graph-based, because object-centric data sources, when composed, result in a graph, in the general case.

Stefan’s claim is that any data format that fulfills the requirements is “more or less” isomorphic to RDF.
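A toy Python sketch can make the composability point tangible. It assumes each source describes objects as (subject, predicate, object) triples keyed by global identifiers; the `ex:` identifiers and the `compose`, `as_graph`, and `reachable` helpers are hypothetical, and this is neither Stefan’s own formulation nor an RDF library. Because the triples are self-describing, composing sources needs no schema (it is just set union), and shared identifiers are what stitch the result into one graph.

```python
from collections import defaultdict
from typing import Dict, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Two independent, schema-less sources. Both use the same global
# identifier "ex:galway" for one entity (identifiers are hypothetical).
source_a: Set[Triple] = {
    ("ex:deri", "ex:locatedIn", "ex:galway"),
    ("ex:deri", "ex:name", "DERI"),
}
source_b: Set[Triple] = {
    ("ex:galway", "ex:inCountry", "ex:ireland"),
    ("ex:galway", "ex:name", "Galway"),
}


def compose(*sources: Set[Triple]) -> Set[Triple]:
    """Composing self-describing triples needs no schema: just union them."""
    merged: Set[Triple] = set()
    for source in sources:
        merged |= source
    return merged


def as_graph(triples: Set[Triple]) -> Dict[str, Set[str]]:
    """View triples as an undirected adjacency map between identifiers."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for s, _, o in triples:
        if o.startswith("ex:"):  # identifiers become nodes; literals are skipped
            graph[s].add(o)
            graph[o].add(s)
    return graph


def reachable(graph: Dict[str, Set[str]], start: str) -> Set[str]:
    """All nodes reachable from start, via depth-first search."""
    seen, stack = {start}, [start]
    while stack:
        for nxt in graph[stack.pop()]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


g = as_graph(compose(source_a, source_b))
print(sorted(reachable(g, "ex:deri")))  # prints ['ex:deri', 'ex:galway', 'ex:ireland']
```

Neither source alone links `ex:deri` to `ex:ireland`; the shared identifier `ex:galway` does. If the sources shared no identifiers, the union would still be a graph, just a disjoint one.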

Several parts of this argument confuse me. First, it’s not clear to me that a positive feedback loop is the same as exploiting Metcalfe’s Law. Second, can’t information be composed even when it is not object-centric? (Is it obvious that entities are required, in general?) Third, I vaguely understand that composing object-centric data sources results in a (possibly disjoint) graph, but are graphs the only or best way to think about this? Further, how can I convince myself of this (presumably obvious) fact about data integration?

Tags: composability, data integration, databases, graph formats, Metcalfe's Law, RDF, Semantic Web, Web of data, WWW

Posted in information ecosystem, semantic web

by jodi

The long-term freedom of the Internet may depend, in part, on convincing the big players of the content industry to modernize their business models.

Motivated by “protecting” the content industry, the U.S. Congress is discussing proposed legislation that could be used to seize domain names and force websites (even search engines) to remove links.

Congress doesn’t yet understand that there are already safe and effective ways to counter piracy — which don’t threaten Internet freedom. “Piracy happens not because it is cheaper, but because it is more convenient,” as Arvind Narayanan reports, musing on a conversation with Congresswoman Lofgren.

What the Congresswoman was saying was this:

- The only way to convince Washington to drop this issue for good is to show that artists and musicians can get paid on the Internet.

- Currently they are not seeing any evidence of this. The Congresswoman believes that new technology needs to be developed to let artists get paid. I believe she is entirely wrong about this; see below.

- The arguments that have been raised by tech companies and civil liberties groups in Washington all center around free speech; there is nothing wrong with that but it is not a viable strategy in the long run because the issue is going to keep coming back.

Arvind’s response is that the technology needed is already here. That’s old news to technologists, but the technology sector needs to educate Congress, whose members may not have the time or skills to find this information themselves.

The dinosaurs of the content industries need to adapt their business models. Piracy is not correlated with a decrease in sales. Piracy happens not because it is cheaper, but because it is more convenient. Businesses need to compete with piracy rather than trying to outlaw it. Artists who’ve understood this are already thriving.

Tags: business models, censorship, content industry, free speech, monetization

Posted in future of publishing, information ecosystem, intellectual freedom

by jodi

Holding on to old business models is not the way to endear yourself to customers.

But unfortunately this is also, simultaneously, a bad time to be a reader. Because the dinosaurs still don’t get it. Ten years of object lessons from the music industry, and they still don’t get it. We have learned, painfully, that media consumers—be they listeners, watchers, or readers—want one of two things:

- DRM-free works for a reasonable price

- or, unlimited single-payment subscription to streaming/DRMed works

Give them either of those things, and they’ll happily pay. Look at iTunes. Look at Netflix. But give them neither, and they’ll pirate. So what are publishers doing?

- Refusing to sell DRM-free books. My debut novel will be re-e-published by the Friday Project imprint of HarperCollins UK later this year; both its editor and I would like it to be published without DRM; and yet I doubt we will be able to make that happen.

- crippling library e-books

- and not offering anything even remotely like a subscription service.

– Jon Evans, When Dinosaurs Ruled the Books, via James Bridle’s Stop Press

Eric Hellman is one of the pioneers of tomorrow’s ebook business models: his company, Gluejar, uses a crowdfunding model to re-release books under Creative Commons licenses. Authors and publishers are paid; fans pay for the books they’re most interested in; and everyone can read and distribute the resulting “unglued” ebooks. Everybody wins.

Tags: business models, content, DRM, ebooks, publishing, subscriptions

Posted in books and reading, future of publishing, information ecosystem

by jodi

We’ve extended the STLR 2011 deadline due to several requests; submissions are now due May 8th.

JCDL workshops are split over two half-days, and we are lucky enough to have *two* keynote speakers: Bernhard Haslhofer of the University of Vienna and Cathy Marshall of Microsoft Research.

Consider submitting!

CALL FOR PARTICIPATION

The 1st Workshop on Semantic Web Technologies for Libraries and Readers

STLR 2011

June 16 (PM) & 17 (AM) 2011

http://stlr2011.weebly.com/

Co-located with the ACM/IEEE Joint Conference on Digital Libraries (JCDL) 2011, Ottawa, Canada

While Semantic Web technologies are successfully being applied to library catalogs and digital libraries, the semantic enhancement of books and other electronic media is ripe for further exploration. Connections between envisioned and emerging scholarly objects (which are doubtless social and semantic) and the digital libraries in which these items will be housed, encountered, and explored have yet to be made and implemented. Likewise, mobile reading brings new opportunities for personalized, context-aware interactions between reader and material, enriched by information such as location, time of day and access history.

This full-day workshop, motivated by the idea that reading is mobile, interactive, social, and material, will be focused on semantically enhancing electronic media as well as on the mobile and social aspects of the Semantic Web for electronic media, libraries and their users. It aims to bring together practitioners and developers involved in semantically enhancing electronic media (including documents, books, research objects, multimedia materials and digital libraries) as well as academics researching more formal aspects of the interactions between such resources and their users. We also particularly invite entrepreneurs and developers interested in enhancing electronic media using Semantic Web technologies with a user-centered approach.

We invite the submission of papers, demonstrations and posters which describe implementations or original research in areas including, but not limited to, the following:

- Strategies for semantic publishing (technical, social, and economic)

- Approaches for consuming semantic representations of digital documents and electronic media

- Open and shared semantic bookmarks and annotations for mobile and device-independent use

- User-centered approaches for semantically annotating reading lists and/or library catalogues

- Applications of Semantic Web technologies for building personal or context-aware media libraries

- Approaches for interacting with context-aware electronic media (e.g. location-aware storytelling, context-sensitive mobile applications, use of geolocation, personalization, etc.)

- Applications for media recommendations and filtering using Semantic Web technologies

- Applications integrating natural language processing with approaches for semantic annotation of reading materials

- Applications leveraging the interoperability of semantic annotations for aggregation and crowd-sourcing

- Approaches for discipline-specific or task-specific information sharing and collaboration

- Social semantic approaches for using, publishing, and filtering scholarly objects and personal electronic media

IMPORTANT DATES

*EXTENDED* Paper submission deadline: May 8th 2011

Acceptance notification: June 1st 2011

Camera-ready version: June 8th 2011

KEYNOTE SPEAKERS

- Bernhard Haslhofer, University of Vienna

- Cathy Marshall, Microsoft Research

PROGRAM COMMITTEE

Each submission will be independently reviewed by 2-3 program committee members.

- India Amos, Textist, Design Editor at Jubilat, USA

- Emmanuelle Bermes, Centre Pompidou Virtuel, France

- Mark Bernstein, Eastgate Systems Inc., USA

- Uldis Bojars, National Library of Latvia, Latvia

- Peter Brantley, Internet Archive, USA

- Dan Brickley, Vrije University Amsterdam, Netherlands

- Guillaume Cabanac, University of Toulouse, France

- Tyng-Ruey Chuang, Academia Sinica, Taiwan

- Paolo Ciccarese, Harvard Medical School, USA

- Tim Clark, Harvard Medical School, USA

- Liza Daly, Threepress Consulting Inc., USA

- Kai Eckert, Mannheim University Library, Germany

- Tudor Groza, University of Queensland, Australia

- Michael Hausenblas, DERI, National University of Ireland Galway, Ireland

- Antoine Isaac, Vrije University of Amsterdam, Netherlands

- Piotr Kowalczyk, Poland

- Brian O’Leary, Magellan Media Partners, USA

- Steve Pettifer, University of Manchester, UK

- Ryan Shaw, University of North Carolina, USA

- Ross Singer, Talis, USA

- William Waites, Open Knowledge Foundation, UK

- Rob Warren, University of Waterloo, Canada

ORGANIZING COMMITTEE

- Alison Callahan, Dept of Biology, Carleton University, Ottawa, Canada

- Dr. Michel Dumontier, Dept of Biology, Carleton University, Ottawa, Canada

- Jodi Schneider, DERI, NUI Galway, Ireland

- Dr. Lars Svensson, German National Library

SUBMISSION INSTRUCTIONS

Please use PDF format for all submissions. Semantically annotated versions of submissions, and submissions in novel digital formats, are encouraged and will be accepted in addition to a PDF version.

All submissions must adhere to the following page limits:

Full length papers: maximum 8 pages

Demonstrations: 2 pages

Posters: 1 page

Use the ACM template for formatting: http://www.acm.org/sigs/pubs/proceed/template.html

Submit using EasyChair: https://www.easychair.org/conferences/?conf=stlr2011

Tags: annotation, CFP, context-aware, ebooks, electronic media, ePub, JCDL2011, LLD, location-based storytelling, multimedia collections, recommendations, semantic libraries, STLR2011, workshops

Posted in future of publishing, library and information science, PhD diary, scholarly communication, semantic web, social semantic web

by jodi

These days my main foray into reference territory is when my colleagues are looking for things.

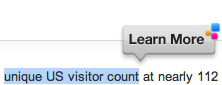

While looking for an update on some social media statistics, I encountered a “get more info” interface at VentureBeat that didn’t annoy me.

When you highlight text (and only when you highlight text), a small, “Learn More” balloon appears. When you click “Learn More” (and only when you click “Learn More”), further info appears.

When you are debating whether to include more information or less, this strategy deserves consideration.

It reminds me of Barend Mons’ derisive phrase “a Christmas tree of hyperlinks”; while he was mainly referring to the lack of information, I also hear in that phrase the reader’s sense of being overwhelmed when everything is hyperlinked.

There is, of course, a tradeoff between hiding information (requiring clicks) and presenting everything at once.

Tags: "Learn More", hyperlinks, hypertext, interfaces

Posted in random thoughts

by jodi

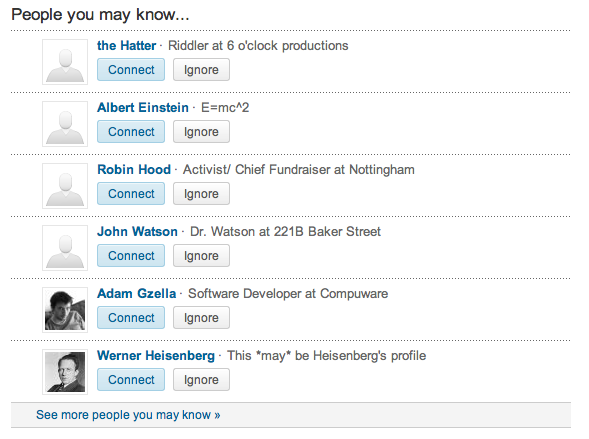

April Fools!

Alas, you need an email address to actually connect!

Tags: aprilfools, aprilfools11, aprilfools2011, linkedin

Posted in random thoughts, social web

by jodi

To support reading, think about the diversity of reading styles.

A study of “How examiners assess research theses” mentions the diversity:

[F]our examples give a good indication of the range of ‘reading styles’:

- A (Hum/Male/17) sets aside time to read the thesis. He checks who is in the references to see that the writers are there who should be there. Then he reads slowly, from the beginning like a book, but taking copious notes.

- B (Sc/Male/22) reads the thesis from cover to cover first without doing anything else. For the first read he is just trying to gain a general impression of what the thesis is about and whether it is a good thesis—that is, are the results worthwhile. He can also tell how much work has actually been done. After the first read he then ‘sits on it’ for a while. During the second reading he starts making notes and reading more critically. If it is an area with which he is not very familiar, he might read some of the references. He marks typographical errors, mistakes in calculations, etc., and makes a list of them. He also checks several of the references just to be sure they have been used appropriately.

- C (SocSc/Female/27) reads the abstract first and then the introduction and the conclusion, as well as the table of contents to see how the thesis is structured; and she familiarises herself with appendices so that she knows where everything is. Then she starts reading through; generally the literature review, and methodology, in the first weekend, and the findings, analysis and conclusions in the second weekend. The intervening week allows time for ideas to mull over in her mind. On the third weekend she writes the report.

- D (SocSc/Male/15) reads the thesis from cover to cover without marking it. He then schedules time to mark it, in about three sittings, again working from beginning to end. At this stage he ‘takes it apart’. Then he reads the whole thesis again.

From Mullins, G. & Kiley, M. (2002). It’s a PhD, not a Nobel Prize: how experienced examiners assess research theses. Studies in Higher Education, 27(4), 369–386. DOI:10.1080/0307507022000011507

Parenthetical comments are (discipline/gender/interview number). Thanks to the NUIG Postgrad Research Society for suggesting this paper.

Posted in books and reading, higher education, PhD diary, scholarly communication

by jodi

I spoke about my first-year Ph.D. research in December at DERI. The topic of my talk: Wikipedia discussions and the nascent World Wide Argument Web. I was proud to have the video (below) posted to our institute video stream.

The Wikipedia research is drawn from our ACM Symposium on Applied Computing paper:

Jodi Schneider, Alexandre Passant, John G. Breslin, “Understanding and Improving Wikipedia Article Discussion Spaces.” In SAC 2011 (Web Track), TaiChung, Taiwan, March 21-25, 2011.

Jodi Schneider – Constructing knowledge through argument: Wikipedia and World Wide Argument Web from DERI, NUI Galway on Vimeo.

This is ongoing work, and feedback is most welcome.

Posted in argumentative discussions, PhD diary, social semantic web

by jodi

Can you distinguish what is being said from how it is said?

In other words, what is a ‘proposition’?

Giving an operational definition of ‘proposition’ or of ‘propositional content’ is difficult. Turns out there’s a reason for that:

Metadiscourse does not simply support propositional content: it is the means by which propositional content is made coherent, intelligible and persuasive to a particular audience.

– Ken Hyland, Metadiscourse, p. 39 ((I’m really enjoying Ken Hyland’s Metadiscourse. Thanks to Sean O’Riain for a wonderful loan! I’m not ready to summarize his thoughts about what metadiscourse is; for one thing, I’m only halfway through.)).

I’m very struck by how the same content can be wrapped with different metadiscourse — resulting in different genres for distinct audiences. When the “same” content is reformulated, new meanings and emphasis may be added along the way. Popularization of science is rich in examples.

For instance, a Science article…

When branches of the host plant having similar oviposition sites were placed in the area, no investigations were made by the H. hewitsoni females.

gets transformed into a Scientific American article…

I collected lengths of P. pittieri vines with newly developed shoots and placed them in the patch of vines that was being regularly revisited. The females did not, however, investigate the potential egg-laying sites I had supplied.

This shows the difficulty of making clean separations between the content and the metadiscourse:

“The ‘content’, or subject matter, remains the same but the meanings have changed considerably. This is because the meaning of a text is not just about the propositional material or what the text could be said to be about. It is the complete package, the result of an interactive process between the producer and receiver of a text in which the writer chooses forms and expressions which will best convey his or her material, stance and attitudes.”

– Ken Hyland, Metadiscourse, p. 39

Example from Hyland (p. 21), who credits Myers, Writing Biology: Texts in the Social Construction of Scientific Knowledge (1990), p. 180.

Tags: aboutness, audience, context, genre theory, meaning, metadiscourse, popularization of science, scientific communication

Posted in argumentative discussions, PhD diary, scholarly communication