Can you distinguish what is being said from how it is said?

In other words, what is a ‘proposition’?

Giving an operational definition of ‘proposition’ or of ‘propositional content’ is difficult. Turns out there’s a reason for that:

Metadiscourse does not simply support propositional content: it is the means by which propositional content is made coherent, intelligible and persuasive to a particular audience.

– Ken Hyland Metadiscourse p39 ((I’m really enjoying Ken Hyland’s Metadiscourse. Thanks to Sean O’Riain for a wonderful loan! I’m not ready to summarize his thoughts about what metadiscourse is — for one thing I’m only halfway through.)).

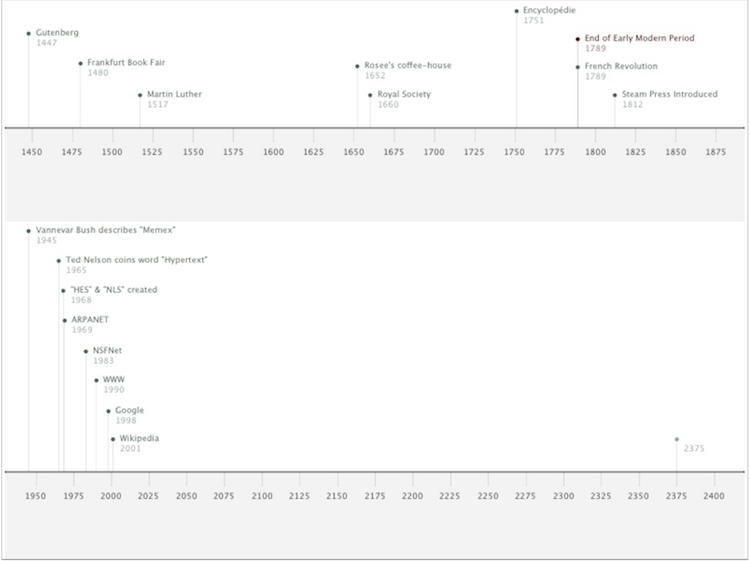

I’m very struck by how the same content can be wrapped in different metadiscourse, resulting in different genres for distinct audiences. When the “same” content is reformulated, new meanings and emphasis may be added along the way. Popularization of science is rich in examples.

For instance, a Science article…

When branches of the host plant having similar oviposition sites were placed in the area, no investigations were made by the H. hewitsoni females.

gets transformed into a Scientific American article…

I collected lengths of P. pittieri vines with newly developed shoots and placed them in the patch of vines that was being regularly revisited. The females did not, however, investigate the potential egg-laying sites I had supplied.

This shows the difficulty of making clean separations between the content and the metadiscourse:

“The ‘content’, or subject matter, remains the same but the meanings have changed considerably. This is because the meaning of a text is not just about the propositional material or what the text could be said to be about. It is the complete package, the result of an interactive process between the producer and receiver of a text in which the writer chooses forms and expressions which will best convey his or her material, stance and attitudes.”

– Ken Hyland Metadiscourse p39

Example from Hyland (page 21), which credits Myers, Writing Biology: Texts in the Social Construction of Scientific Knowledge (1990), p. 180.