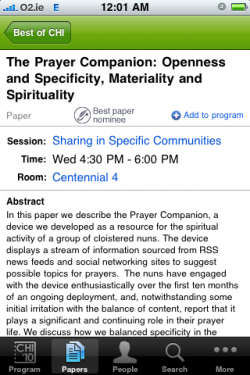

Via Gene Golovchinsky, I learned of an iPhone app for CHI2010. What a great way to amplify the conference! Thanks to Justin Weisz and the rest of the CMU crew.

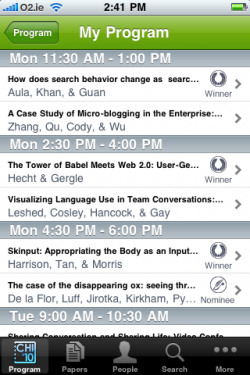

I was happy to browse the proceedings while lounging. The papers I mark show up in my personal schedule and in a reading list.

I think it’s an attractive alternative to making a paper list by hand, using some conferences’ clunky online scheduling tools, or circling events in large conference handouts. If you keep an iPhone or iPod in your pocket, you could use the app during the conference, but I might also want to print my sessions on an index card. Exporting the list would therefore be a good enhancement: besides printing, I’d like to send the list of readings directly to Zotero (or another bibliographic manager).

The advance program embedded in the conference website still has some advantages: it’s easier to find out more about session types (e.g., alt.chi), and courses and workshops stand out more online.

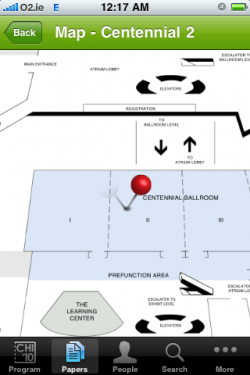

Wayfinding is hard in on-screen PDFs, so I hope that in the long run scholarly proceedings become more screen-friendly. While at present I find an iPhone appealing for reading fiction, on-screen scholarly reading is harder: for one thing, it’s not linear.

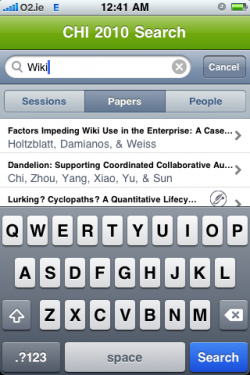

I’d like to see integrated, reader-friendly environments for conference proceedings, with full-text papers. I envision moving seamlessly between the proceedings and an offline reading environment. Publishers can already support offline reading on a wide variety of smartphones: the HTML5-based Ibis Reader uses ePub, a standard based on XHTML and CSS. There’s no getting around the download step, but an integrated environment can be “download first, choose later”. I’ve never had much luck with CD-ROM and USB-based conference proceedings, except for pulling off two or three PDFs of papers to read later.